Despite the shaky economic situation, global CEOs still prioritize AI integration. In KPMG’s Global CEO Outlook 2024, 64% of respondents said they will invest in artificial intelligence no matter what the economic conditions.

ChatGPT effectively kicked off this wave of AI integration. OpenAI, the company behind ChatGPT, states that 92% of Fortune 500 companies use its products, and that usage of its API doubled after the release of GPT-4o mini in July 2024.

Chatbots are the first thing that comes to mind when thinking about ChatGPT API use. It’s no wonder: AI-driven chatbots and virtual assistants are saving companies billions and handle a far wider range of tasks than classic rule-based chatbots. The chatbot market is expected to reach $27 billion by 2030.

However, integrating ChatGPT into your app brings much more than chat capabilities. Personalized recommendations, automated responses, sentiment analysis, and much more are now widely used across industries. In fact, app development with ChatGPT is a good call anywhere you need dynamic conversation and content generation to deliver a great user experience.

Maybe you’re already planning the development of a standalone AI app. Or maybe you want to learn how to use ChatGPT API to add AI capabilities to your existing tech tools.

This guide walks you through the ins and outs of ChatGPT API integration. We start by explaining how the ChatGPT API works, then cover its key features, technical requirements, and best practices to help you get the most out of it.

How does the ChatGPT API work?

The ChatGPT API gives you programmatic access to text processing: it generates human-like responses and keeps conversations flowing naturally. Put simply, your application sends requests to the model (the “brain” behind ChatGPT) and receives its responses. Compared with using ChatGPT directly, you get much finer control over the interface and the entire interaction, including the prompts, the flow of the conversation, and how data is processed.
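To make the request/response cycle concrete, here is a minimal TypeScript sketch of what your backend sends to OpenAI’s Chat Completions endpoint, assuming a runtime with the global `fetch` (Node.js 18+). The helper names `buildChatRequest` and `askModel` are illustrative, not part of any SDK.

```typescript
type Role = "system" | "user" | "assistant";

interface ChatMessage {
  role: Role;
  content: string;
}

interface HttpRequest {
  method: "POST";
  headers: Record<string, string>;
  body: string;
}

// OpenAI's Chat Completions endpoint.
const CHAT_COMPLETIONS_URL = "https://api.openai.com/v1/chat/completions";

// Builds the request: the API key goes in the Authorization header,
// the model name and the conversation go in the JSON body.
function buildChatRequest(apiKey: string, model: string, messages: ChatMessage[]): HttpRequest {
  return {
    method: "POST",
    headers: {
      "Content-Type": "application/json",
      Authorization: `Bearer ${apiKey}`,
    },
    body: JSON.stringify({ model, messages }),
  };
}

// Sends the request and pulls the generated text out of the JSON response.
async function askModel(apiKey: string, model: string, messages: ChatMessage[]): Promise<string> {
  const res = await fetch(CHAT_COMPLETIONS_URL, buildChatRequest(apiKey, model, messages));
  if (!res.ok) {
    throw new Error(`OpenAI API error: ${res.status}`);
  }
  const data = (await res.json()) as { choices: { message: { content: string | null } }[] };
  return data.choices[0]?.message.content ?? "";
}
```

You would call `askModel(apiKey, "gpt-4o-mini", [{ role: "user", content: "Hello" }])` from server-side code only, so the key never reaches the browser.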

The ChatGPT API owes its popularity to the following capabilities:

Understanding and generating natural language

The API can handle complex queries, summarize text, and translate between languages, which makes it helpful for chatbots, content creation, and any scenario that calls for human-sounding conversation. In a real-life app, this means answering questions, drafting text, or engaging in dialogue.

Short-term context through tokens

The API itself has no memory between requests: your app maintains context by sending earlier messages along with each new one. The model reads all of this text as tokens, chunks of text such as words, parts of words, or symbols. As long as the conversation fits within the model’s limits, the model can refer back to earlier exchanges, and even when the oldest messages have to be dropped, it can still follow the most recent ones. This keeps the conversation flow in your app logical and coherent.
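A minimal sketch of how an app might keep a conversation within a token budget by dropping the oldest messages first. It assumes the common rule of thumb of roughly 4 characters per token for English text; `estimateTokens` and `trimToContextWindow` are hypothetical helpers, and exact counts require a real tokenizer such as OpenAI’s tiktoken.

```typescript
type Role = "system" | "user" | "assistant";

interface ChatMessage {
  role: Role;
  content: string;
}

// Rough heuristic: about 4 characters per token for English text.
// Use a real tokenizer (e.g., tiktoken) when you need exact counts.
function estimateTokens(text: string): number {
  return Math.ceil(text.length / 4);
}

// Keeps all system messages, then fills the remaining budget with the
// newest messages, dropping the oldest ones first. Per-message formatting
// overhead is ignored, so leave some headroom below the real limit.
function trimToContextWindow(messages: ChatMessage[], maxTokens: number): ChatMessage[] {
  const system = messages.filter((m) => m.role === "system");
  const rest = messages.filter((m) => m.role !== "system");

  let budget = maxTokens - system.reduce((sum, m) => sum + estimateTokens(m.content), 0);
  const kept: ChatMessage[] = [];

  // Walk from the newest message backwards until the budget runs out.
  for (const msg of rest.slice().reverse()) {
    const cost = estimateTokens(msg.content);
    if (cost > budget) break;
    kept.unshift(msg);
    budget -= cost;
  }
  return system.concat(kept);
}
```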

However, there are some limitations to consider:

Context window. The model can only take into account what fits within a fixed number of tokens, known as its context window. Once a conversation exceeds this limit, the oldest parts are dropped and effectively forgotten unless your app includes that context again, for example, as a summary.

Response length limits. The model returns a response only up to a certain number of tokens per reply. If the reply would be longer, it is cut off, and the user has to send another prompt (for instance, "Continue" or a more specific instruction). Also keep in mind that the context window has to accommodate both the input (your prompt plus any conversation history) and the output (the model’s response).

Maximum token limits for GPT models

| Model | Context window | Key details | Ideal use cases |
|---|---|---|---|
| GPT-3 | Up to 4,096 tokens | Handles short to medium-length interactions. | Simple conversations, short-form content generation, basic Q&A. |
| GPT-3.5 Turbo | Up to 16,385 tokens (4,096 in the original version) | Similar to GPT-3, optimized for chat and a broader range of tasks. | Customer support, chatbots, content creation where brevity is key. |
| GPT-4 | Up to 8,192 tokens (32,768 in the GPT-4-32k variant) | Better at managing longer interactions. | Complex conversations, detailed reports, nuanced dialogue. |
| GPT-4 Turbo | Up to 128,000 tokens | Faster and more cost-effective than GPT-4, with a much larger context window. | Advanced use cases requiring high-speed interactions and detailed contexts, like technical assistance or multi-turn chats. |
| GPT-4o mini | Up to 128,000 tokens | Small, fast, low-cost model with a large context window. | Long conversations and documents, high-volume chat, and other tasks where cost and speed matter. |

Processing speed. The API’s response time varies with server load, model complexity, prompt size, and response length: the higher the overall demand, the slower the responses. Larger, more capable models such as GPT-4o generally take longer to respond than lighter models such as GPT-3.5 Turbo.

Reasoning complexity. Response time also depends on how much reasoning the answer requires. Intricate queries involving multi-step reasoning take longer to process, and although models like GPT-4o handle such tasks better, they typically need more time for them than simpler models do.
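When a reply hits the output limit, the Chat Completions API marks the choice with `finish_reason: "length"`. The sketch below, with a hypothetical `continuationRequest` helper, detects that case and builds the follow-up "Continue" request described under response length limits; it only prepares the messages and leaves sending them to your API client.

```typescript
interface ChatMessage {
  role: "system" | "user" | "assistant";
  content: string;
}

// The parts of a Chat Completions choice that this helper reads.
interface Choice {
  message: { content: string | null };
  finish_reason: string;
}

// If the reply was cut off by the token limit (finish_reason "length"),
// returns the message list for a follow-up request; otherwise returns null.
function continuationRequest(history: ChatMessage[], choice: Choice): ChatMessage[] | null {
  if (choice.finish_reason !== "length") {
    return null;
  }
  return [
    ...history,
    // Send the partial answer back so the model knows where it stopped.
    { role: "assistant", content: choice.message.content ?? "" },
    { role: "user", content: "Continue exactly where you left off." },
  ];
}
```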

That’s enough about limitations for now; we’ll highlight some more later. Let’s continue with the useful features of the ChatGPT API.

Custom behavior using system messages

You can set system messages that define the AI’s tone, style, and behavior. This lets you keep responses consistent with your brand voice, whether that’s formal, friendly, playful, or whatever fits your app’s goal.

Here are some examples:

```javascript
const systemPromptPsychologist = `
You are a supportive and compassionate virtual psychologist.
Based on the user's message, provide thoughtful emotional support, reflection, or coping strategies.
Do not offer a diagnosis or medical advice. Use a calm and empathetic tone.
`;

const systemPromptChef = `
You are a recipe assistant. Based on the user's listed ingredients, dietary restrictions, and cuisine preference, provide a recipe with step-by-step instructions.
Include preparation and cooking time. Ensure the recipe is simple and practical to follow.
`;

const systemPromptStartupAdvisor = `
You are a startup advisor. Based on the user’s business idea, describe its strengths and weaknesses, suggest improvements, and outline the next logical steps.
Be constructive, realistic, and use a tone suitable for early-stage entrepreneurs.
`;
```

Importance of ChatGPT API in app development

The ChatGPT API gives you advanced text-based interactions without building an AI model from scratch. From a business perspective, it offers:

Saved time and resources. Training a custom AI model from the ground up is expensive and time-consuming, mainly because of the expertise and computational resources it requires. ChatGPT is already backed by a mature large language model (LLM), the one that popularized and democratized AI-assisted text generation.

Cost-effectiveness. OpenAI handles the infrastructure and model updates, so you pay for the tokens you use rather than for hosting and maintaining a model yourself.

Enhanced user experience. A smooth integration delivers quick, natural responses that make customer interactions engaging, meaningful, and frictionless, which is especially important for customer support and content generation.

Scalability. The API grows with your app’s needs, handling more tasks without requiring you to rebuild or rearchitect the solution.

Now, let’s take a hands-on approach to achieving the benefits we mentioned above.

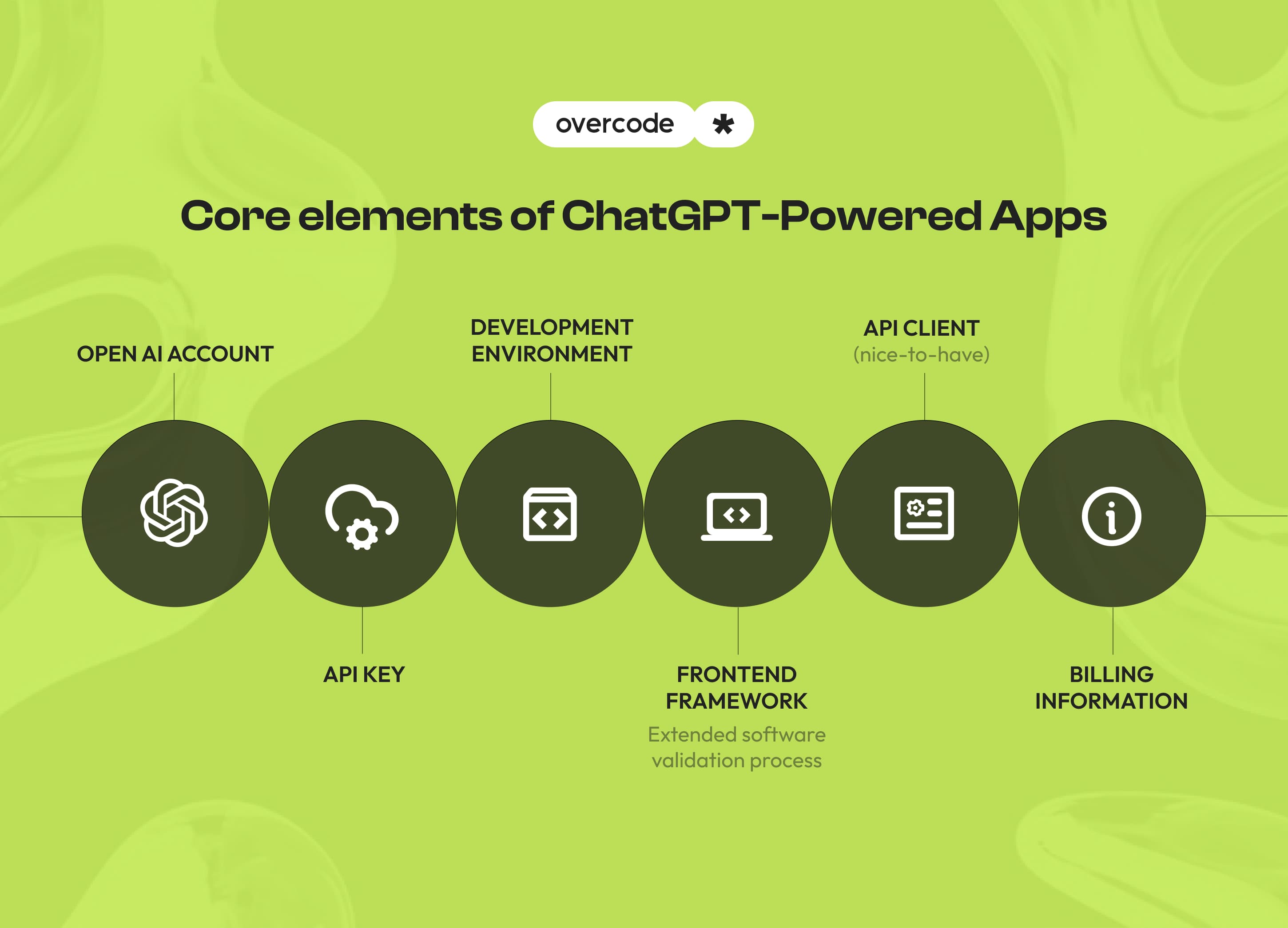

ChatGPT API integration requirements

You'll need a few essentials to incorporate ChatGPT into your app via the API. Make sure you have the following:

OpenAI account. Sign up for an account on OpenAI's website if you have not done so yet. You’ll need it to access the API and manage your API keys.

API key. You must generate an API key to authenticate your app's requests to the ChatGPT API.

Development environment. To interact with the API, you’ll need to use a programming language that can handle HTTP requests and parse JSON responses. Top development choices include:

JavaScript (Node.js)

Python

Ruby

Java

PHP

Any backend technology that can send HTTP requests

Python is considered the go-to language for such integrations thanks to its comparative ease of use, rich libraries, and AI-friendly ecosystem. At the same time, Node.js is a common choice for building scalable web apps. Ruby, Java, and PHP also work well as long as they support sending HTTP requests and parsing JSON responses.

Frontend framework (usually needed). If your app has a user interface, you’ll need React, Vue.js, Angular, or another frontend tool to control how your app displays ChatGPT’s responses and manages interactions.

API client (nice-to-have). OpenAI provides official libraries for Python and Node.js to simplify the integration.

Billing information. Set up billing in your OpenAI account, since API usage is charged by the number of tokens processed.

ChatGPT API costs

The ChatGPT API price depends on the model you use and how many tokens it has to process. The more tokens you use, the higher the costs. Prices vary by model and can change over time, so check OpenAI’s pricing page for the latest price tag.
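Since the bill is driven by input and output tokens, which OpenAI prices separately, a back-of-the-envelope estimate is simple arithmetic. The prices in this sketch are parameters you take from OpenAI’s pricing page; `estimateCostUSD` and `estimateMonthlyCostUSD` are hypothetical helpers, and the numbers in the usage note are placeholders, not real prices.

```typescript
// Prices in USD per 1 million tokens, taken from OpenAI's pricing page.
// Input and output tokens are priced separately.
interface ModelPricing {
  inputPerMillion: number;
  outputPerMillion: number;
}

function estimateCostUSD(inputTokens: number, outputTokens: number, pricing: ModelPricing): number {
  return (inputTokens * pricing.inputPerMillion + outputTokens * pricing.outputPerMillion) / 1_000_000;
}

// Projects a monthly bill from request volume and average tokens per request.
function estimateMonthlyCostUSD(
  requestsPerMonth: number,
  avgInputTokens: number,
  avgOutputTokens: number,
  pricing: ModelPricing,
): number {
  return estimateCostUSD(requestsPerMonth * avgInputTokens, requestsPerMonth * avgOutputTokens, pricing);
}
```

For example, with placeholder prices of $1 per million input tokens and $4 per million output tokens, 500,000 input and 250,000 output tokens come to $1.50.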

OpenAI also enforces rate limits to prevent server overload, capping the number of requests and tokens processed within a given timeframe. These caps vary by model and by your account’s usage tier.

For example, OpenAI’s earlier published limits gave free trial users 20 requests per minute (RPM) and 40,000 tokens per minute (TPM), while paid accounts started at 60 RPM and 60,000 TPM.

These limits can increase after 48 hours and as your usage grows; you can track your current limits and request higher ones on OpenAI’s platform. Either way, they remain a constraint, so plan accordingly to avoid service interruptions in your app.
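One way to stay under your limits is to check requests on your own side before sending them. This is a minimal sketch of a rolling 60-second limiter for RPM and TPM, assuming you estimate each request’s tokens in advance; the `MinuteRateLimiter` class is hypothetical, and a deployment with several server instances would need shared state (for example, in Redis) instead of an in-memory array.

```typescript
// Keeps client-side usage under requests-per-minute (RPM) and
// tokens-per-minute (TPM) caps using a rolling 60-second window.
class MinuteRateLimiter {
  private events: { time: number; tokens: number }[] = [];
  private readonly maxRequests: number;
  private readonly maxTokens: number;
  private readonly now: () => number;

  constructor(maxRequests: number, maxTokens: number, now: () => number = () => Date.now()) {
    this.maxRequests = maxRequests;
    this.maxTokens = maxTokens;
    this.now = now;
  }

  // Records the request and returns true if it fits within both limits;
  // returns false (without recording) if the caller should wait.
  tryAcquire(estimatedTokens: number): boolean {
    const cutoff = this.now() - 60_000;
    this.events = this.events.filter((e) => e.time > cutoff);

    const usedTokens = this.events.reduce((sum, e) => sum + e.tokens, 0);
    if (this.events.length >= this.maxRequests || usedTokens + estimatedTokens > this.maxTokens) {
      return false;
    }
    this.events.push({ time: this.now(), tokens: estimatedTokens });
    return true;
  }
}
```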

Batch requests with ChatGPT API

Your app may need to process many requests at once, for example, when generating content in bulk or handling simultaneous user queries. In such cases, batching can save time. Instead of sending a separate request for each prompt, you can combine several related prompts into a single API call, which reduces network overhead and keeps you from hitting RPM limits too quickly. For large jobs that don’t need an immediate answer, OpenAI also offers a Batch API that processes a file of requests asynchronously.

Keep in mind, however, that all the tokens in a combined request (input and expected output) still count against your TPM limit.
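For large offline jobs, OpenAI’s Batch API accepts a JSONL file in which each line is a separate request with its own `custom_id`, `method`, `url`, and `body`. The sketch below only builds that file’s contents from a list of prompts; uploading it and creating the batch are left to your API client, and the `buildBatchJsonl` helper is hypothetical.

```typescript
interface ChatMessage {
  role: "system" | "user" | "assistant";
  content: string;
}

// One line of a Batch API input file: an independent Chat Completions request.
interface BatchLine {
  custom_id: string;
  method: "POST";
  url: "/v1/chat/completions";
  body: { model: string; messages: ChatMessage[] };
}

// Converts prompts into JSONL text (one JSON object per line).
function buildBatchJsonl(prompts: string[], model: string, systemPrompt: string): string {
  return prompts
    .map((prompt, i): BatchLine => ({
      custom_id: `request-${i + 1}`, // used later to match results to prompts
      method: "POST",
      url: "/v1/chat/completions",
      body: {
        model,
        messages: [
          { role: "system", content: systemPrompt },
          { role: "user", content: prompt },
        ],
      },
    }))
    .map((line) => JSON.stringify(line))
    .join("\n");
}
```

Results can come back in a different order than the input, so match them to your prompts by `custom_id`.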

How to get the ChatGPT API key

Among ChatGPT API integration requirements, the process of obtaining API keys deserves a closer look:

Sign up at OpenAI. If you don’t have an account, head over to OpenAI’s website and create one.

Go to API settings. After logging in, open the API keys section of your dashboard.

Generate an API key. Select “Create new secret key” and copy the key somewhere safe. Once you leave the page, it won’t be displayed again.

Your API key is your access pass, so don’t neglect API key security. Keep it private, and don’t share it in public repositories.

ChatGPT mobile app tech stack

To integrate ChatGPT API into your mobile app, make sure you have the proper development environment and the right tools in place:

Node.js or Python for handling API calls on the backend.

Frontend technologies like React.js or Vue.js for web clients and React Native, Flutter, or Swift/Kotlin for mobile interface development.

Axios (JavaScript/React Native) or Requests (Python) for sending API requests.

Redux, MobX, Zustand, or SWR for state management on the frontend side.

WebSockets for real-time interactions (optional).

Let's highlight Node.js as a reasonable backend choice for ChatGPT integration and React for building apps with a frontend that handles various interactions and displays responses dynamically.

ChatGPT API Node.js

Install Node.js and npm.

Set up a server using NestJS, Express.js, or Koa.

Use axios or fetch to send requests to the ChatGPT API.

Instead of using axios or fetch, you can simplify your code by using the official OpenAI Node.js SDK. Install it by running the following command:

```bash
npm install openai
```

Use the SDK to interact with the ChatGPT API. Here’s an example of how to use it:

```javascript
import OpenAI from 'openai';

const client = new OpenAI({
  apiKey: process.env['OPENAI_API_KEY'], // This is the default and can be omitted
});

const response = await client.responses.create({
  model: 'gpt-4o',
  instructions: 'You are a coding assistant that talks like a pirate',
  input: 'Are semicolons optional in JavaScript?',
});

console.log(response.output_text);
```

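The example above uses version 4.95.1 of the OpenAI NPM package. Because it uses top-level await, run it as an ES module (for example, a .mjs file or a project with "type": "module" in package.json).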

ChatGPT API React

Create a React project, for example with Vite (Create React App, used in many older tutorials, is now deprecated).

Use fetch or axios to connect with your backend.

Display responses dynamically in your chat UI.
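In the React app, the call to your backend can live in a small helper like the one below. It is a sketch that assumes a hypothetical `/api/chat` route on your server returning `{ reply: string }`; the browser never talks to OpenAI directly, so the API key stays on the server.

```typescript
interface ChatMessage {
  role: "user" | "assistant";
  content: string;
}

// Minimal fetch-compatible signature, so the helper can be tested without a network.
type FetchLike = (
  url: string,
  init: { method: string; headers: Record<string, string>; body: string },
) => Promise<{ ok: boolean; status: number; json(): Promise<unknown> }>;

// Sends the conversation to your own backend route (hypothetical /api/chat)
// and returns the assistant's reply. The OpenAI key never reaches the browser.
async function sendChatMessage(
  messages: ChatMessage[],
  endpoint = "/api/chat",
  fetchFn: FetchLike = fetch,
): Promise<string> {
  const res = await fetchFn(endpoint, {
    method: "POST",
    headers: { "Content-Type": "application/json" },
    body: JSON.stringify({ messages }),
  });
  if (!res.ok) {
    throw new Error(`Chat request failed with status ${res.status}`);
  }
  const data = (await res.json()) as { reply?: unknown };
  if (typeof data.reply !== "string") {
    throw new Error("Unexpected response format from the backend");
  }
  return data.reply;
}
```

Inside a component, you would call `sendChatMessage` from a submit handler, then append the returned reply to the message list held in state.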

With these steps, you’ll have ChatGPT ready to power your chatbot or any other AI-driven app.

How to integrate ChatGPT into an app

Below, we walk you through the nitty-gritty of integrating the ChatGPT API, based on Overcode’s experience building AI modules and integrating them into our clients’ software.

Creating API instances

Obtain your OpenAI API key from the OpenAI platform by following the official setup guide (or the steps in the paragraphs above) to enable your app to access the ChatGPT models.

During this step, set up billing and usage limits that match your anticipated usage, and store your API key securely (ideally in environment variables or a secret management service) to prevent unauthorized access. Never expose API keys in a GitHub repository or in your application’s client-side code.
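A small sketch of the environment-variable approach: read the key once at startup, fail fast if it is missing, and mask it whenever it has to appear in logs. `OPENAI_API_KEY` is the variable name the official SDKs read by default; `loadApiKey` and `maskKey` are hypothetical helpers.

```typescript
// Reads the API key from an environment map and fails fast if it is missing.
// In Node.js, pass process.env.
function loadApiKey(env: Record<string, string | undefined>, name = "OPENAI_API_KEY"): string {
  const key = env[name]?.trim();
  if (!key) {
    throw new Error(`${name} is not set. Add it to your environment or secret manager.`);
  }
  return key;
}

// Masks a key for logging so the full secret never ends up in log files.
function maskKey(key: string): string {
  return key.length <= 8 ? "****" : `${key.slice(0, 3)}...${key.slice(-4)}`;
}
```

In Node.js, you would call `loadApiKey(process.env)` once when the server starts.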

Set up the API client

Next, set up the API client in your chosen programming language. It handles sending requests to the ChatGPT API and receiving responses.

Implementing ChatGPT API requests

Next, you define the input for the model and any context it needs. The API returns its response as JSON, from which your app extracts the generated text.

For instance, in a travel planning app, the user might ask, "What should I do in Stockholm for 2 days?" The context could include their preferences, like "I love gastronomy and art."

The API then processes the input and returns relevant recommendations, which you can display to users via your app's interface.
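The Stockholm example could be turned into a request like this. It is a sketch: the `TravelPreferences` shape and `buildTravelPlannerMessages` are hypothetical, and it simply places the stored preferences in the system message and the user’s question in the user message.

```typescript
interface ChatMessage {
  role: "system" | "user" | "assistant";
  content: string;
}

// Hypothetical profile data your app stores for each user.
interface TravelPreferences {
  interests: string[];
  budget?: string;
}

// Combines the question with stored preferences into a Chat Completions message list.
function buildTravelPlannerMessages(question: string, prefs: TravelPreferences): ChatMessage[] {
  const context = [
    `Interests: ${prefs.interests.join(", ") || "not specified"}`,
    prefs.budget ? `Budget: ${prefs.budget}` : null,
  ]
    .filter((line): line is string => line !== null)
    .join("\n");

  return [
    {
      role: "system",
      content: `You are a travel planning assistant. Tailor recommendations to this traveler profile:\n${context}`,
    },
    { role: "user", content: question },
  ];
}
```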

Sometimes, you may need ChatGPT to return not just plain text but structured data in JSON format – for example, an object that your app can further process or visualize. This is especially helpful if you integrate the ChatGPT output into automated workflows, external APIs or dashboards.

To get structured responses, you can explicitly ask the model (via a system message) to return only a JSON object with specific keys. Below is an example in JavaScript using the official OpenAI Node.js SDK:

```typescript
import OpenAI from 'openai';

const openai = new OpenAI({
  apiKey: process.env.OPENAI_API_KEY,
});

const getJsonResponse = async ({
  origin,
  destination,
  tripDuration,
}: {
  origin: string;
  destination: string;
  tripDuration: number;
}) => {
  const response = await openai.chat.completions.create({
    model: 'gpt-4o-mini',
    // JSON mode: the model must return a syntactically valid JSON object.
    response_format: { type: 'json_object' },
    messages: [
      {
        role: 'system',
        content: `
You are a travel assistant. Based on the user's destinations and trip duration, create a JSON travel itinerary.
Include 3–7 places to visit per day.
The format should be: {"YYYY-MM-DD": [{"name": "Place Name", "placeType": "places" | "activities"}]}.
Return only a JSON object with no code blocks, no text explanations, and no additional formatting.
`,
      },
      {
        role: 'user',
        content: `origin: ${origin}, destination: ${destination}, trip duration: ${tripDuration} days`,
      },
    ],
  });

  const extractedData = response.choices[0]?.message?.content;
  if (!extractedData) {
    console.error('No data extracted from the response');
    return;
  }

  try {
    const data = JSON.parse(extractedData);
    console.log(data);
    return data;
  } catch (e) {
    console.error('Response does not contain valid JSON:', extractedData);
  }
};

getJsonResponse({
  origin: 'New York',
  destination: 'London',
  tripDuration: 7,
}).catch(console.error);
```

This example also uses version 4.95.1 of the OpenAI NPM package.

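Note: The example above uses JSON mode (response_format: { type: 'json_object' }), which guarantees syntactically valid JSON but not a particular structure; JSON mode also requires the word "JSON" to appear somewhere in your messages. To make the output match an exact schema, use Structured Outputs via response_format: { type: "json_schema", ... }, which is only supported in gpt-4o-mini, gpt-4o-mini-2024-07-18, gpt-4o-2024-08-06, and later models. With older models, this parameter won’t work as expected.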

Validating AI-generated data

AI systems may occasionally "hallucinate" or return inaccurate data, and ChatGPT is no exception. If the model’s response affects your app’s business logic, validate it thoroughly. For example, if you ask the AI to create a multi-day itinerary for a city, some recommendations might be inaccurate or poorly located. In that case, check the recommended locations against a geographic API like Google Maps to make sure they exist and are close to the intended destination.
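Before any geographic lookup, it helps to check that the JSON actually has the shape you asked for. This sketch validates the itinerary format used in the travel assistant prompt, date keys mapped to arrays of `{ name, placeType }` objects; `parseItinerary` and `isItem` are hypothetical helpers, and checking that each place exists (for example, via Google Maps) would be a separate step.

```typescript
// Expected shape: {"YYYY-MM-DD": [{"name": "...", "placeType": "places" | "activities"}]}
interface ItineraryItem {
  name: string;
  placeType: "places" | "activities";
}
type Itinerary = Record<string, ItineraryItem[]>;

const DATE_KEY = /^\d{4}-\d{2}-\d{2}$/;

// Type guard for a single itinerary entry.
function isItem(item: unknown): item is ItineraryItem {
  if (typeof item !== "object" || item === null) return false;
  const { name, placeType } = item as { name?: unknown; placeType?: unknown };
  return (
    typeof name === "string" &&
    name.trim() !== "" &&
    (placeType === "places" || placeType === "activities")
  );
}

// Returns the itinerary if the parsed JSON matches the expected shape, otherwise null.
function parseItinerary(raw: unknown): Itinerary | null {
  if (typeof raw !== "object" || raw === null || Array.isArray(raw)) return null;
  const result: Itinerary = {};
  for (const [date, items] of Object.entries(raw as Record<string, unknown>)) {
    if (!DATE_KEY.test(date) || !Array.isArray(items) || !items.every(isItem)) return null;
    result[date] = items;
  }
  return result;
}
```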

Handle errors and rate limits

Error handling is crucial for a good user experience, so plan for failed API requests and rate limits. Best practices include retrying failed requests, prioritizing requests, increasing the wait time between retries for rate-limited requests, and showing clear error messages to the user.
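Here is a minimal sketch of retries with exponential backoff and random jitter. It assumes errors expose a numeric `status` property (the `openai` package’s `APIError` does), retries only 429 and 5xx responses, and uses hypothetical helper names; the base delay, cap, and retry count are illustrative defaults. The official SDKs already retry some failures on their own, so a wrapper like this matters most for raw HTTP calls or custom retry policies.

```typescript
// Reads an HTTP status from an error object, if it has one.
function statusOf(err: unknown): number | undefined {
  if (typeof err === "object" && err !== null && "status" in err) {
    const status = (err as { status: unknown }).status;
    return typeof status === "number" ? status : undefined;
  }
  return undefined;
}

// Retry only rate limits (429) and server-side errors (5xx).
function isRetryable(err: unknown): boolean {
  const status = statusOf(err);
  return status === 429 || (status !== undefined && status >= 500);
}

// Exponential backoff with jitter: the ceiling doubles with each attempt
// (capped at maxMs), and the actual delay is a random value in its upper half.
function backoffDelayMs(attempt: number, baseMs = 500, maxMs = 30_000, random: () => number = Math.random): number {
  const ceiling = Math.min(maxMs, baseMs * 2 ** attempt);
  return ceiling / 2 + random() * (ceiling / 2);
}

// Calls fn, retrying retryable failures up to maxRetries times.
async function withRetries<T>(
  fn: () => Promise<T>,
  maxRetries = 5,
  sleep: (ms: number) => Promise<void> = (ms) => new Promise<void>((resolve) => setTimeout(resolve, ms)),
): Promise<T> {
  let lastError: unknown;
  for (let attempt = 0; attempt <= maxRetries; attempt++) {
    try {
      return await fn();
    } catch (err) {
      lastError = err;
      if (!isRetryable(err) || attempt === maxRetries) break;
      await sleep(backoffDelayMs(attempt));
    }
  }
  throw lastError;
}
```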

Integrate responses

Finally, process the API’s responses and display them in your app’s interface. Answers should read naturally as a logical part of the conversation; a robotic tone puts users off.

You can avoid many usability and performance issues by pairing React.js with Next.js. React.js handles the interactive UI elements, while Next.js offers flexible rendering options and server-side route handlers. A route handler can request a streamed response from the ChatGPT API (stream: true) and relay it to the browser, so users see lengthy answers appear in real time instead of waiting for the full reply. Next.js also deploys well to serverless platforms, which simplifies scaling.
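Streaming works by setting `stream: true` on a Chat Completions request; the response then arrives as server-sent events, where each `data:` line carries a JSON chunk with the next piece of text in `choices[0].delta.content`, and the stream ends with `data: [DONE]`. The sketch below parses that raw stream incrementally, buffering lines split across network chunks; `ChatStreamParser` is a hypothetical class, and the official SDKs can handle this for you.

```typescript
// Incrementally parses the server-sent events of a streamed Chat Completions
// response and returns the text deltas found in each piece of input.
class ChatStreamParser {
  private buffer = "";
  done = false;

  // Feed raw text as it arrives from the network.
  push(chunk: string): string[] {
    this.buffer += chunk;
    const lines = this.buffer.split("\n");
    // The last element may be an incomplete line; keep it for the next push.
    this.buffer = lines.pop() ?? "";

    const deltas: string[] = [];
    for (const raw of lines) {
      const line = raw.trim();
      if (!line.startsWith("data:")) continue; // skip blank lines and comments
      const payload = line.slice(5).trim();
      if (payload === "[DONE]") {
        this.done = true;
        continue;
      }
      const event = JSON.parse(payload) as { choices?: { delta?: { content?: string } }[] };
      const text = event.choices?.[0]?.delta?.content;
      if (text) deltas.push(text);
    }
    return deltas;
  }
}
```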

If a response is unclear or requires further explanation, you can include quick-reply buttons or suggestion chips to keep the flow.

That was the standard workflow. But what if your app needs more specialized AI development? The next section covers that.

Enhancing ChatGPT integration with advanced features

ChatGPT goes far beyond basic automation and chatbot use cases, and with the right upgrades, you can make it even more powerful. Some enhancements to consider include:

Personalized user experience

There is no one-size-fits-all chatbot. To take the user experience to the next level, you can customize ChatGPT’s responses by feeding the model structured data, such as user preferences or past behavior within the conversation.

For example, if your app offers travel planning, you can customize responses based on the user’s travel history, preferred destinations, or budget.

Smarter intent recognition

To make user interactions with ChatGPT more natural and efficient, you can improve how well it understands user intent. Two ways to achieve this:

Structured prompts. Guide users to phrase their queries more clearly.

Conversation history tracking. Since the API does not store past messages, the app has to do this. This usually means the last messages are saved and sent back with each new request so the AI knows what the user refers to.
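A minimal sketch of app-side history tracking: an in-memory store keyed by user ID that keeps only the most recent messages and prepends the system prompt to each request. `ConversationStore` is hypothetical, and a production app would persist sessions in a database or cache rather than in process memory.

```typescript
interface ChatMessage {
  role: "system" | "user" | "assistant";
  content: string;
}

// In-memory conversation history per user. The API is stateless, so the app
// resends the most recent messages with every request.
class ConversationStore {
  private readonly sessions = new Map<string, ChatMessage[]>();
  private readonly maxMessages: number;

  constructor(maxMessages: number) {
    this.maxMessages = maxMessages;
  }

  // Adds a user or assistant message and keeps only the newest maxMessages.
  append(userId: string, message: ChatMessage): void {
    const history = this.sessions.get(userId) ?? [];
    history.push(message);
    this.sessions.set(userId, history.slice(-this.maxMessages));
  }

  // System prompt plus recent history, ready to send as `messages`.
  messagesFor(userId: string, systemPrompt: string): ChatMessage[] {
    const history = this.sessions.get(userId) ?? [];
    return [{ role: "system", content: systemPrompt }, ...history];
  }
}
```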

Levels of ChatGPT integration:

| Integration Level | Use Case |
|---|---|
| Basic Text Chat | Chatbots, FAQs, virtual assistants |
| Context-Aware Chat | Personal assistants, advisors, tutors |
| External API Enrichment | Weather, CRMs, financial data, custom logic |
| Weather, CRMs, financial data, custom logic | Customer support, HR bots |
| Multimodal Integration | EdTech, MedTech, productivity apps |

Integration with external API

A ChatGPT API integration can be enhanced by linking it with external APIs, such as CRM systems, sentiment analysis tools, or services that handle multimodal inputs like images and audio.

Think of an AI-based travel app that can be integrated with weather APIs to provide real-time weather updates during the conversation, or a fitness app that can be linked to health tracking APIs to offer personalized fitness advice based on real-time data.

For example, when working with Voyagi, a travel planning app, Overcode implemented a feature that integrates weather updates into the chat and provides users with up-to-date weather information as part of their travel planning.
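One common way to wire an external API into the chat is OpenAI’s function calling: you describe a tool in the `tools` parameter, the model may answer with a `tool_calls` entry containing JSON arguments, your code runs the real lookup, and you send the result back as a `tool` message. This sketch shows that step for weather; `getWeather` is a hypothetical stub returning fake data, and it is a generic illustration, not how the Voyagi feature was built.

```typescript
// Tool definition passed in the `tools` parameter of a Chat Completions request.
const weatherTool = {
  type: "function" as const,
  function: {
    name: "get_weather",
    description: "Get the current weather for a city",
    parameters: {
      type: "object",
      properties: { city: { type: "string", description: "City name, e.g. Stockholm" } },
      required: ["city"],
    },
  },
};

// Shape of an entry in choices[0].message.tool_calls.
interface ToolCall {
  id: string;
  type: "function";
  function: { name: string; arguments: string };
}

interface ToolMessage {
  role: "tool";
  tool_call_id: string;
  content: string;
}

// Hypothetical stub; replace with a call to your weather provider.
async function getWeather(city: string): Promise<{ city: string; tempC: number; summary: string }> {
  return { city, tempC: 18, summary: "partly cloudy" }; // fake data for illustration
}

// Executes the requested tool and builds the message to send back to the model.
async function runToolCall(call: ToolCall): Promise<ToolMessage> {
  if (call.function.name !== weatherTool.function.name) {
    throw new Error(`Unknown tool: ${call.function.name}`);
  }
  const args = JSON.parse(call.function.arguments) as { city: string };
  const weather = await getWeather(args.city);
  return { role: "tool", tool_call_id: call.id, content: JSON.stringify(weather) };
}
```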

Sentiment analysis for tone adjustments

ChatGPT already picks up on sentiment to the extent the conversation context allows. Going a step further, you can integrate dedicated sentiment analysis tools alongside the ChatGPT API to fine-tune the tone, making responses in your app sound even more natural and “empathetic.”
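Assuming an external sentiment tool returns a score between -1 (negative) and 1 (positive), its output can be fed into the system message. The thresholds and wording below are arbitrary examples, and `toneInstruction` and `buildSystemPrompt` are hypothetical helpers.

```typescript
// Maps a sentiment score (assumed range -1..1) to an extra tone instruction.
// Thresholds are illustrative; tune them against your own data.
function toneInstruction(score: number): string {
  if (score <= -0.3) {
    return "The user seems frustrated. Acknowledge the problem, apologize briefly, and keep answers short and concrete.";
  }
  if (score >= 0.3) {
    return "The user is in a positive mood. Keep a warm, upbeat tone.";
  }
  return "Use a neutral, friendly tone.";
}

// Appends the tone instruction to the base system prompt.
function buildSystemPrompt(base: string, sentimentScore: number): string {
  return `${base}\n${toneInstruction(sentimentScore)}`;
}
```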

Going beyond the text with multimodal capabilities

ChatGPT is best known for text, but what if your app could handle images, audio, and video too? Newer models such as GPT-4o already accept images as input, and by integrating third-party APIs that process images, audio, and video alongside ChatGPT responses, your app can be even more helpful for customer support, education, healthcare, and any other field where images and sounds matter as much as words.
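For image input, models such as GPT-4o accept a user message whose `content` is an array of parts: text parts and `image_url` parts. A minimal sketch of building such a message; `buildImageQuestion` is a hypothetical helper, and the URL may be a public link or a base64 data URL.

```typescript
// Content parts for a multimodal user message in the Chat Completions API.
type ContentPart =
  | { type: "text"; text: string }
  | { type: "image_url"; image_url: { url: string } };

interface MultimodalUserMessage {
  role: "user";
  content: ContentPart[];
}

// Pairs a question with an image so the model can answer about the image.
function buildImageQuestion(question: string, imageUrl: string): MultimodalUserMessage {
  return {
    role: "user",
    content: [
      { type: "text", text: question },
      { type: "image_url", image_url: { url: imageUrl } },
    ],
  };
}
```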

Ready to take your app to the next level?

Overcode can fine-tune ChatGPT for your specific use case.

Best practices for ChatGPT integration

You can make the most out of your ChatGPT integration by adopting tried and tested practices. Here’s how.

Keeping your API key safe

The API key is what gives you access to ChatGPT. If it leaks, bad actors can exploit it, and you could end up with unwanted charges. Here’s how to lock it down:

Limit API key access to only what’s necessary.

Keep API keys out of your code: load them from environment variables, never push them to GitHub or any other public repository, and add environment files to .gitignore.

Store your API keys securely using .env files in development or secret management tools like AWS Secrets Manager or HashiCorp Vault in production.

Change your API keys regularly to minimize the risk.

Monitor your API usage on OpenAI’s dashboard to spot anything suspicious.
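Alongside the dashboard, your backend can watch usage itself. Each Chat Completions response includes a `usage` object with `prompt_tokens`, `completion_tokens`, and `total_tokens`; this sketch (with a hypothetical `UsageMonitor` class and an alert callback you supply) sums them per label, such as a feature or key name, and fires once when a budget is crossed.

```typescript
// Subset of the `usage` object returned with each Chat Completions response.
interface Usage {
  prompt_tokens: number;
  completion_tokens: number;
  total_tokens: number;
}

// Sums token usage per label and calls onAlert once when a label
// first exceeds the budget.
class UsageMonitor {
  private readonly totals = new Map<string, number>();
  private readonly budget: number;
  private readonly onAlert: (label: string, total: number) => void;

  constructor(budget: number, onAlert: (label: string, total: number) => void) {
    this.budget = budget;
    this.onAlert = onAlert;
  }

  record(label: string, usage: Usage): void {
    const previous = this.totals.get(label) ?? 0;
    const total = previous + usage.total_tokens;
    this.totals.set(label, total);
    if (previous <= this.budget && total > this.budget) {
      this.onAlert(label, total);
    }
  }

  // Call on a schedule (e.g., daily) to start a new budgeting period.
  reset(): void {
    this.totals.clear();
  }
}
```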

Handling errors and API rate limits

Every API has its flaws, and sometimes things do go wrong. Build resilience into your system so it doesn’t break when the ChatGPT API encounters issues.

Retry requests that fail due to network or rate limit issues, using exponential backoff (i.e., waiting a little longer after each failure).

Ensure your app operates within the rate limits as set by OpenAI.

Capture API errors in your logs to diagnose and fix issues quickly.

Prepare a default response or an option to handle user requests if ChatGPT is unavailable.
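A default response can be wired in together with a timeout. This minimal sketch races the model call against a timer and returns a predefined fallback message on error or timeout; `withFallback` is a hypothetical helper, and it does not cancel the underlying request (pass an AbortSignal to your HTTP client for that).

```typescript
// Returns the model's reply, or `fallback` if the call fails or takes longer than timeoutMs.
async function withFallback(call: () => Promise<string>, fallback: string, timeoutMs: number): Promise<string> {
  let timer: ReturnType<typeof setTimeout> | undefined;
  const timeout = new Promise<never>((_, reject) => {
    timer = setTimeout(() => reject(new Error(`Timed out after ${timeoutMs} ms`)), timeoutMs);
  });
  try {
    return await Promise.race([call(), timeout]);
  } catch (err) {
    // Log for diagnosis, then degrade gracefully instead of showing an error.
    console.error("ChatGPT request failed, using fallback:", err);
    return fallback;
  } finally {
    clearTimeout(timer);
  }
}
```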

Optimizing costs

ChatGPT is powerful, but API costs can add up quickly if you aren’t careful. Here’s how to build an app using the ChatGPT API and stay cost-efficient:

Choose the right model. You don’t need the most advanced (and expensive) model for every use case; if a lower-tier model does the job, use it.

Mind the number of tokens per request. Keep prompts concise while still giving the model what it needs to produce the response you want.

Avoid unnecessary calls. Before hitting the API, check whether you really need to, for example, whether a suitable answer is already cached.

Use caching wherever possible. You can implement in-memory caching or use tools like Redis for more complex caching requirements.

Group related queries into one request instead of multiple small requests.
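A minimal in-memory cache with a time-to-live, keyed by model and messages, could look like this. `ResponseCache` is a hypothetical class; it suits repeated, non-personalized prompts such as FAQs, and Redis would replace the `Map` when several server instances need to share the cache.

```typescript
interface ChatMessage {
  role: "system" | "user" | "assistant";
  content: string;
}

// In-memory response cache with a time-to-live, keyed by model + messages.
// Expired entries are simply overwritten; a real cache would also bound its size.
class ResponseCache {
  private readonly entries = new Map<string, { value: string; expiresAt: number }>();
  private readonly ttlMs: number;
  private readonly now: () => number;

  constructor(ttlMs: number, now: () => number = () => Date.now()) {
    this.ttlMs = ttlMs;
    this.now = now;
  }

  // Returns a fresh cached reply, or calls fetcher (your real API call) and stores the result.
  async getOrFetch(model: string, messages: ChatMessage[], fetcher: () => Promise<string>): Promise<string> {
    const key = JSON.stringify({ model, messages });
    const hit = this.entries.get(key);
    if (hit && hit.expiresAt > this.now()) {
      return hit.value;
    }
    const value = await fetcher();
    this.entries.set(key, { value, expiresAt: this.now() + this.ttlMs });
    return value;
  }
}
```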

Monitoring security and compliance

Data management and security top the list of concerns among organizations using LLMs.

Security and privacy are serious matters in modern software development, so make sure you do the following when integrating the ChatGPT API into your app:

Handle sensitive data responsibly, processing it in accordance with data protection laws such as GDPR or HIPAA. This may mean anonymizing sensitive data before using the API and ensuring user consent before processing their data.

If you store data locally, make sure it is encrypted both at rest and in transit.

Use HTTPS with TLS (Transport Layer Security) to ensure that all API calls are encrypted during transmission, protecting user data.

Schedule regular security checks to assess the overall security posture of your app.
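As a first step toward anonymizing data before it reaches the API, obvious identifiers can be masked. This regex-based sketch (hypothetical `redactPII` helper) catches only simple email and phone formats and may also mask other long digit sequences such as dates; it is an illustration, not a GDPR or HIPAA compliance measure.

```typescript
// Simple patterns for emails and phone-like digit sequences.
// They miss many PII formats; treat this as a starting point only.
const EMAIL_PATTERN = /[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}/g;
const PHONE_PATTERN = /\+?\d[\d\s().-]{7,}\d/g;

// Replaces detected emails and phone numbers with placeholders before text is sent to the API.
function redactPII(text: string): string {
  return text.replace(EMAIL_PATTERN, "[EMAIL]").replace(PHONE_PATTERN, "[PHONE]");
}
```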

At Overcode, we review and test all of these approaches in real-world AI projects.

ChatGPT API ideas – real-world use cases

Gartner predicts that by 2026, more than 30% of the increase in demand for APIs will come from AI and tools using LLMs. The rise of AI capabilities in business offerings makes perfect sense, as businesses in any niche benefit from better customer service, truly personalized interactions, and reduced costs thanks to advanced automation. ChatGPT-powered apps excel at exactly that.

Let’s look at how Overcode has already applied AI to power apps in specific niches:

Travel planning app. Overcode helped the client develop the Voyagi app. Its AI-powered recommendations tailor travel plans to travelers’ actual needs, for example, finding the best places to visit, organizing daily schedules, or planning the most efficient route. Users appreciate the smart, personalized suggestions that make trip planning easier and more fun.

Predictive analytics software. Another AI-related project Overcode worked on was SignifAI. This app uses AI to analyze large data sets and predict trends and outcomes, helping businesses forecast demand and identify new opportunities.

Legal tech app. Overcode worked with a client to develop a comprehensive AI legal assistant app, which law firms use to streamline contract review and legal research.

AI-powered WhatsApp chatbot. Overcode built an AI-driven WhatsApp chatbot for a VC firm in Israel that connects to the firm’s global CRM system. Users can quickly pull up the communication history of any contact just by entering a name or company, which saves significant time and streamlines information retrieval for our client.

Overcode can help you tap into the ChatGPT API to make apps smarter, faster, and more useful. Whatever your app idea is, be it a smart chatbot for customer support, an AI-driven content tool for marketing and documentation, an intelligent sales assistant, or something else, Overcode has the technical know-how.

Some more ChatGPT API ideas for your inspiration:

ING Bank considerably enhanced its customer service in the Netherlands with an AI-powered customer-facing chatbot. It helps 20% more customers than their previous classic chatbot.

Nubank is piloting a ChatGPT-driven virtual assistant to boost customer service, offering personalized credit options and financial advice based on individual customer data.

Morgan Stanley actively uses OpenAI API integrations in its tools, including AI @ Morgan Stanley Assistant and AI @ Morgan Stanley Debrief. These work like custom-made encyclopedias for finance professionals, helping with investment recommendations and general business performance and automating internal processes.

Zalando integrated the OpenAI API to launch its smart assistant, which uses AI to tailor the shopping process and improve product discovery.

Have a ChatGPT integration idea?

Overcode can bring it to life - from architecture and API design to seamless integration, UI/UX design, and post-launch support.

App development with ChatGPT: Common challenges and solutions

Integrating ChatGPT sometimes comes with technological hiccups. Here is the list of possible issues and the table of solutions tested by Overcode engineers.

Slow response time

Unfortunately, delayed responses from the API are fairly common, especially with long queries or complex interactions. Nobody likes waiting, so slow responses frustrate users and can hurt your app’s bottom line.

Inappropriate or biased responses

Although ChatGPT rarely returns harmful or inappropriate output, it’s still wise to add guardrails that keep its replies unbiased and appropriate to your app’s context.

Handling API downtime and failures

Downtime or unexpected API errors disrupt user experience and directly affect customer satisfaction and retention.

Handling scalability

As your user base grows, so does the number of requests to the ChatGPT API, which brings performance challenges.

Table of common challenges and solutions in app development with ChatGPT:

| Challenge | What you can do |
|---|---|
| Slow response time | - Use response streaming for partial real-time replies. - Keep context short to reduce unnecessary tokens. - Combine smaller queries into one call. - Use simpler GPT models for less critical tasks to speed up responses. |
| Inappropriate or biased responses | - Use OpenAI’s content moderation API to filter harmful content. - Set strict system messages to guide the model. - Add post-processing filters to refine outputs. |
| API downtime and failures | - Provide backup responses or predefined answers when the API is down. - Cache frequent responses to reduce API calls. - Monitor API health with real-time dashboards and set automated alerts. |
| Handling scalability | - Implement rate limiting and load balancing to handle high traffic. - Use monitoring tools like New Relic or Prometheus to track performance and catch bottlenecks. - For larger products, consider serverless architecture for automatic scaling based on traffic demand. - Deploy across multiple regions to ensure high availability and low latency globally. |
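The moderation step from the table can be a single call to OpenAI’s moderation endpoint before a reply is shown. This sketch assumes the `omni-moderation-latest` model and reads only the `flagged` field of each result; `isFlagged` and `guardReply` are hypothetical helpers, and the `fetchFn` parameter exists so the network call can be swapped out in tests.

```typescript
// Minimal subset of the moderation response used here.
interface ModerationResponse {
  results: { flagged: boolean }[];
}

type FetchLike = (
  url: string,
  init: { method: string; headers: Record<string, string>; body: string },
) => Promise<{ ok: boolean; status: number; json(): Promise<unknown> }>;

// Returns true if OpenAI's moderation endpoint flags the text.
async function isFlagged(text: string, apiKey: string, fetchFn: FetchLike = fetch): Promise<boolean> {
  const res = await fetchFn("https://api.openai.com/v1/moderations", {
    method: "POST",
    headers: { "Content-Type": "application/json", Authorization: `Bearer ${apiKey}` },
    body: JSON.stringify({ model: "omni-moderation-latest", input: text }),
  });
  if (!res.ok) {
    throw new Error(`Moderation request failed: ${res.status}`);
  }
  const data = (await res.json()) as ModerationResponse;
  return data.results.some((r) => r.flagged);
}

// Guardrail: replaces a flagged model reply with a safe message.
async function guardReply(reply: string, apiKey: string, fetchFn: FetchLike = fetch): Promise<string> {
  return (await isFlagged(reply, apiKey, fetchFn)) ? "Sorry, I can't help with that." : reply;
}
```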

Once these challenges are addressed, integrating ChatGPT can significantly expand what your app can do.

Your users will appreciate how AI helps them with complex questions or tasks that involve understanding images or documents. Its ability to adapt and respond the way users expect makes it a strong fit for intelligent interaction in advanced digital solutions.

Need help with integration?

ChatGPT API brings flexibility and next-level functionality to your app.